How containers changed the world, twice!

Tags:

container docker virtualization

Description:

An analogy between two seemingly unrelated types of containers, with a brief look at container orchestration.

The world before containers

Cargo transportation over the sea was a mess

Before containers, people had been transporting all sorts of goods over the sea for centuries. According to McContainers, the goods were often held at warehouses in a port, then manually loaded onto ships whenever one was available. Without containers, the goods had to be packed in barrels, boxes and sacks of countless different sizes and shapes. Loading and unloading them was space-inefficient, costly, and time-consuming.

The problems came not only from loading but also from the transportation itself. Without containers, goods often shifted around and got mixed up. On-ship space was used very inefficiently because goods could not be neatly stacked next to and on top of each other. Imagine a ship carrying coal and rice at the same time without clear separation: it would definitely be a mess.

Resource allocation between applications on servers was chaotic

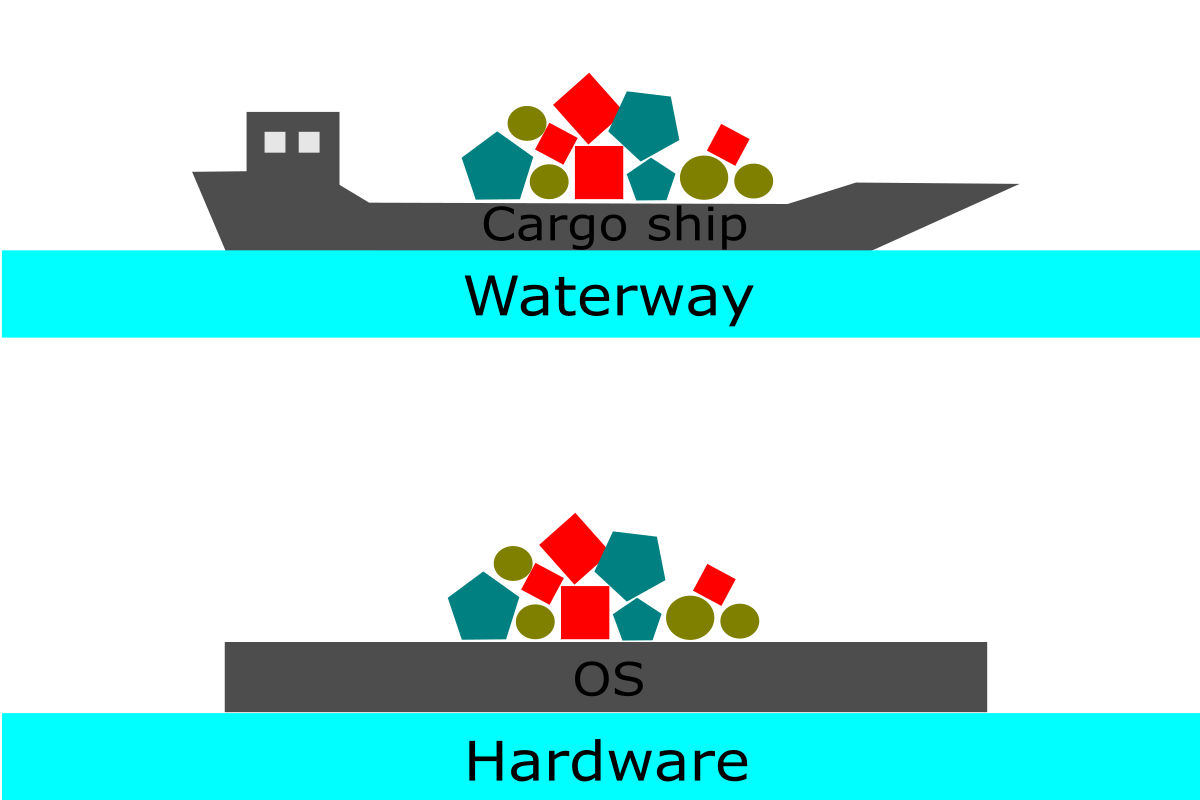

In earlier stages of the internet, each physical server had one Operating System (OS) to manage its resources, and applications were installed directly on that OS. Because each application was often unique and required different dependencies to run, it usually took a lot of time and effort to configure everything correctly during installation. Uninstalling caused similar problems. Ideally, everything no longer needed should be removed from the server; however, it was hard to tell what was truly unneeded and what was still required by other applications.

Again, the problems came not only from installation but also from day-to-day operation. Since applications had vastly different sizes and resource requirements, there was no easy way for the OS to define a clear resource boundary for each one. As a result, from time to time, one application could take almost all available resources, leaving the others underperforming or even frozen.

The first attempt to fix the problems

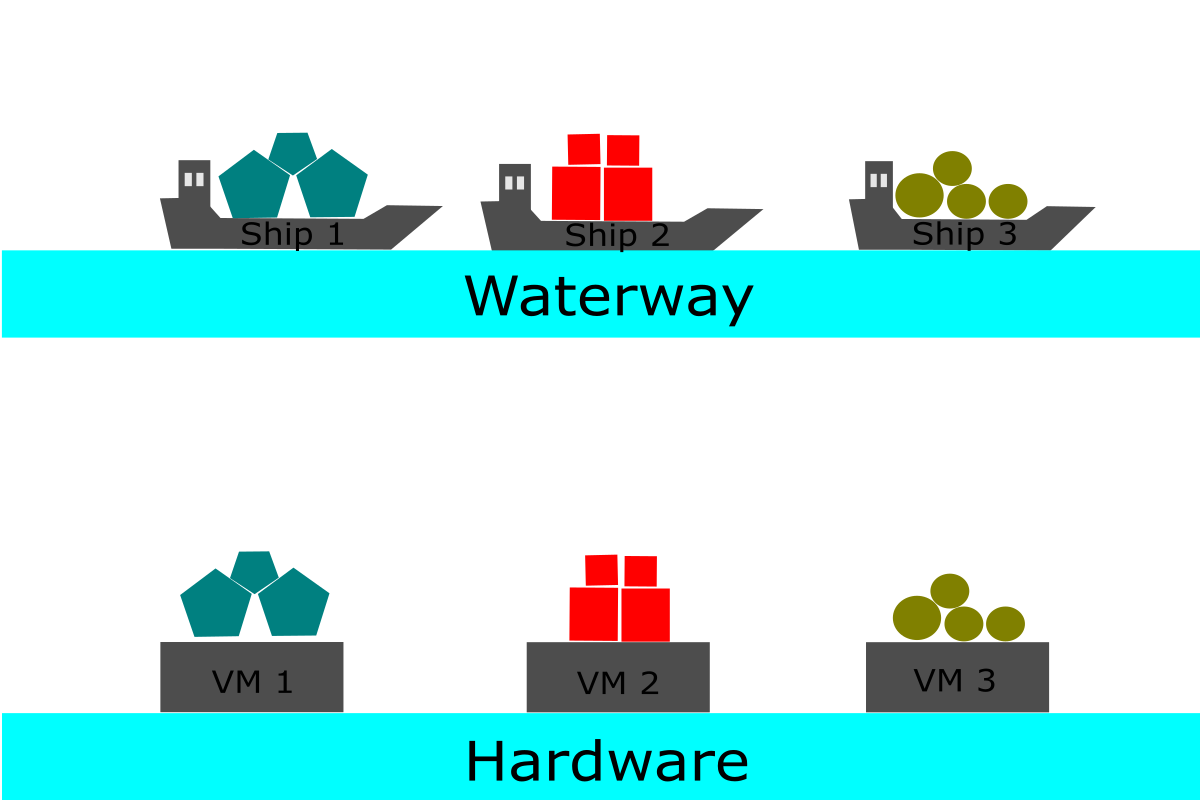

Using many smaller cargo ships

Loading all sorts of goods onto one big cargo ship was not the most brilliant idea, so, naturally, one solution was to use many smaller ships, each carrying only certain types of goods. This way, every type of cargo got its own space and did not interfere with the others, making loading, unloading and transport less of a hassle.

However, this wasted resources because of economies of scale. Building one very big ship usually cost less than building many small ships with the same total capacity. That was because a big ship needed many essential components, especially the "ship orchestrator" ones such as the bridge and cabins, only once, whereas a fleet of small ships had to build them again and again at similar cost each time. As a result, with a fleet of tiny ships, many essential components could not be shared, which wasted resources.

Creating "mini machines" by using virtualization

After all the chaos, virtualization was introduced to better manage applications on servers. Instead of running a single OS on each physical machine, virtualization allowed multiple Virtual Machines (VMs) to run at the same time on one physical server. Dividing a physical machine into many virtual machines made resource allocation and isolation between applications a lot easier.

The problem, again, was wasted resources. VMs were time-consuming to build, especially in large numbers, because each one ran a full OS that required its own full configuration. In addition, every guest OS took up its own share of disk space and RAM, so running many VMs could lead to memory shortages compared to running a single OS that orchestrated everything.

Containers came into play to save the day

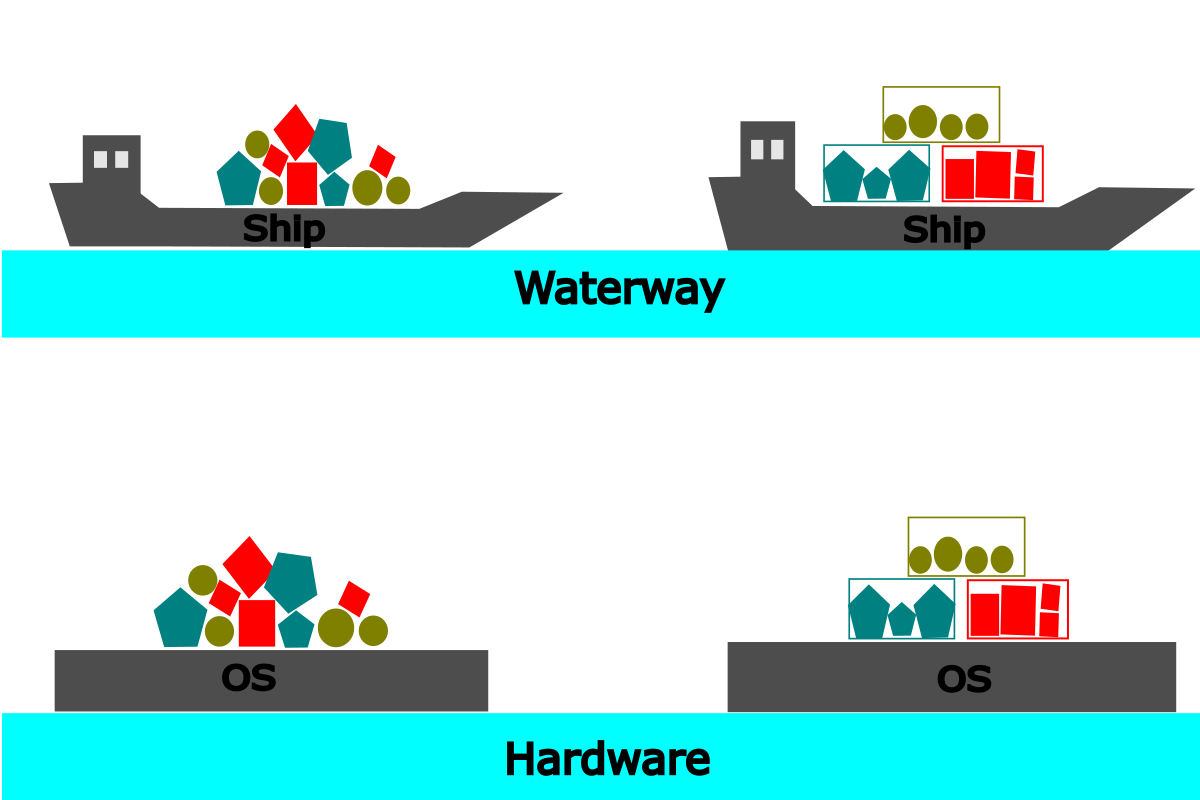

Shipping containers allowed efficient cargo transportation.

To combine the cost-effectiveness of a single huge ship with the convenience of keeping goods separated, the container was born. Containers can be imagined as small ships carrying cargo, without all the unnecessary parts of a ship. These small "ships" are then stacked onto a large, real ship that does all the mechanical work of transporting the goods to their final destination.

Because containers are basically boxes of standardized sizes holding goods, they are lightweight, cheap, and easy and fast to move around. They also do not require any extra "dependencies". For example, to transport clothes, all we need is to put them into a container with a dehumidifier installed, and everything is ready to go. The sailors do not need to install any special equipment to keep the clothes dry during the voyage, even in bad weather.

Overall, due to all those advantages, it is safe to assume that the logistics industry would be a lot less efficient without the invention of the container.

Application containers allowed efficient application deployment.

In this context, containers are similar to VMs: each has its own file system holding all the dependencies required to run the application, and its own share of computing resources. But instead of running its own OS, a container shares the host OS (more precisely, its kernel) with the other applications. This OS is similar to the "big ship" in the example above: it does all the mechanical work of running its containers.

Because containers do not run a full OS, they are lightweight and very fast to modify and start. They also do not require any extra "dependencies": everything needed to run the application has already been "containerized", so we do not need to install anything extra on the system, which makes system maintenance considerably easier.

Because of these advantages, much like cargo containers did for shipping, application containers are expected to revolutionize the internet. Indeed, some would say they have done so already.

The next evolution: Container orchestration

In the past, both cargo and application containers were managed by humans, which made things slow. For cargo, port masters had to do all the calculations and optimizations themselves: how many containers to put on a ship, how to stack them efficiently, and countless other factors. Server managers faced the same kind of problem in deciding how to deploy, connect, and integrate containers effectively on their servers.
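The stacking question that port masters and server managers face is essentially a bin-packing problem: fit items of different sizes into as few fixed-capacity bins (ships, or servers) as possible. As an illustration only, here is a minimal Python sketch of the classic first-fit decreasing heuristic. The function name, the workload names and the 8 GiB server capacity are all hypothetical, and real planners weigh many more constraints (weight balance, unloading order, CPU and memory together) that this sketch ignores.

```python
from typing import Dict, List


def first_fit_decreasing(sizes: Dict[str, int], capacity: int) -> List[List[str]]:
    """Hypothetical helper: pack named items into bins of a fixed capacity.

    Items are sorted from largest to smallest, then each one goes into the
    first bin that still has room; a new bin is opened only when none fits.
    """
    bins: List[List[str]] = []  # item names placed in each bin
    free: List[int] = []        # remaining capacity of each bin

    for name, size in sorted(sizes.items(), key=lambda kv: kv[1], reverse=True):
        if size > capacity:
            raise ValueError(f"{name} ({size}) does not fit in any bin ({capacity})")
        for i, room in enumerate(free):
            if size <= room:
                bins[i].append(name)
                free[i] -= size
                break
        else:
            # No existing bin had room, so open a new one.
            bins.append([name])
            free.append(capacity - size)
    return bins


if __name__ == "__main__":
    # Hypothetical workloads: memory demand in GiB, servers with 8 GiB each.
    demands = {"web": 3, "db": 5, "cache": 2, "worker": 4, "logs": 1, "api": 3}
    for n, placed in enumerate(first_fit_decreasing(demands, capacity=8), start=1):
        print(f"server {n}: {placed}")
```

Here the 18 GiB of demand lands on three servers, which is the minimum possible at 8 GiB each. First-fit decreasing is fast and usually close to optimal, but it is a heuristic and does not always find the smallest number of bins.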

Cargo ports have attempted to solve this with robots and management software. However, according to McKinsey, the benefits of these automation tools are not always guaranteed, and port operations today are still largely run by humans.

This is where the first real difference between cargo containers and application containers appears. While traditional ports are struggling, the "internet ports", also known as servers, are increasingly adopting automation tools to deploy and manage their containers.

Automating the processes within a container's lifecycle is generally referred to as "container orchestration". Some of the best-known tools for this are Red Hat OpenShift, Apache Mesos, and the industry-standard Kubernetes.

Each tool differs from the others; generally, however, according to the Kubernetes documentation, they give server managers (or, more commonly, DevOps engineers, who combine software development and IT operations) the ability to automate service discovery, load balancing, storage orchestration, rollouts, rollbacks, bin packing, self-healing, and secret and configuration management.
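Self-healing is commonly described as a control (reconciliation) loop: the orchestrator repeatedly compares the desired state, such as "run three copies of this app", with what is actually running, and starts or stops containers to close the gap. The Python sketch below illustrates that idea only; `FakeCluster` and `reconcile` are hypothetical names, and the in-memory cluster is a stand-in rather than a model of any tool's actual API.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class FakeCluster:
    """Hypothetical in-memory stand-in for a real cluster (not a real API)."""
    running: Dict[str, str] = field(default_factory=dict)  # container id -> app name
    _ids: itertools.count = field(default_factory=lambda: itertools.count(1))

    def start(self, app: str) -> str:
        cid = f"{app}-{next(self._ids)}"
        self.running[cid] = app
        return cid

    def stop(self, cid: str) -> None:
        self.running.pop(cid, None)

    def count(self, app: str) -> int:
        return sum(1 for a in self.running.values() if a == app)


def reconcile(cluster: FakeCluster, desired: Dict[str, int]) -> None:
    """One pass of a control loop: move the observed state toward the desired one."""
    for app, want in desired.items():
        have = cluster.count(app)
        # Too few replicas (e.g. one crashed): start replacements.
        for _ in range(want - have):
            cluster.start(app)
        # Too many replicas: stop the surplus.
        if have > want:
            surplus = [cid for cid, a in cluster.running.items() if a == app][: have - want]
            for cid in surplus:
                cluster.stop(cid)


if __name__ == "__main__":
    cluster = FakeCluster()
    desired = {"web": 3, "worker": 1}
    reconcile(cluster, desired)  # initial rollout
    cluster.stop("web-1")        # simulate a crashed container
    reconcile(cluster, desired)  # self-healing: a replacement is started
    print(sorted(cluster.running))  # ['web-2', 'web-3', 'web-5', 'worker-4']
```

A real orchestrator runs such a pass continuously and also handles scheduling, health checks and the loss of whole machines; this single-pass version only shows the desired-versus-actual comparison at the heart of self-healing.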

Containers and container orchestration tools have revolutionized the way applications are deployed on servers across the internet. Without containers, we would not be able to run "the internet" at the scale we have today.